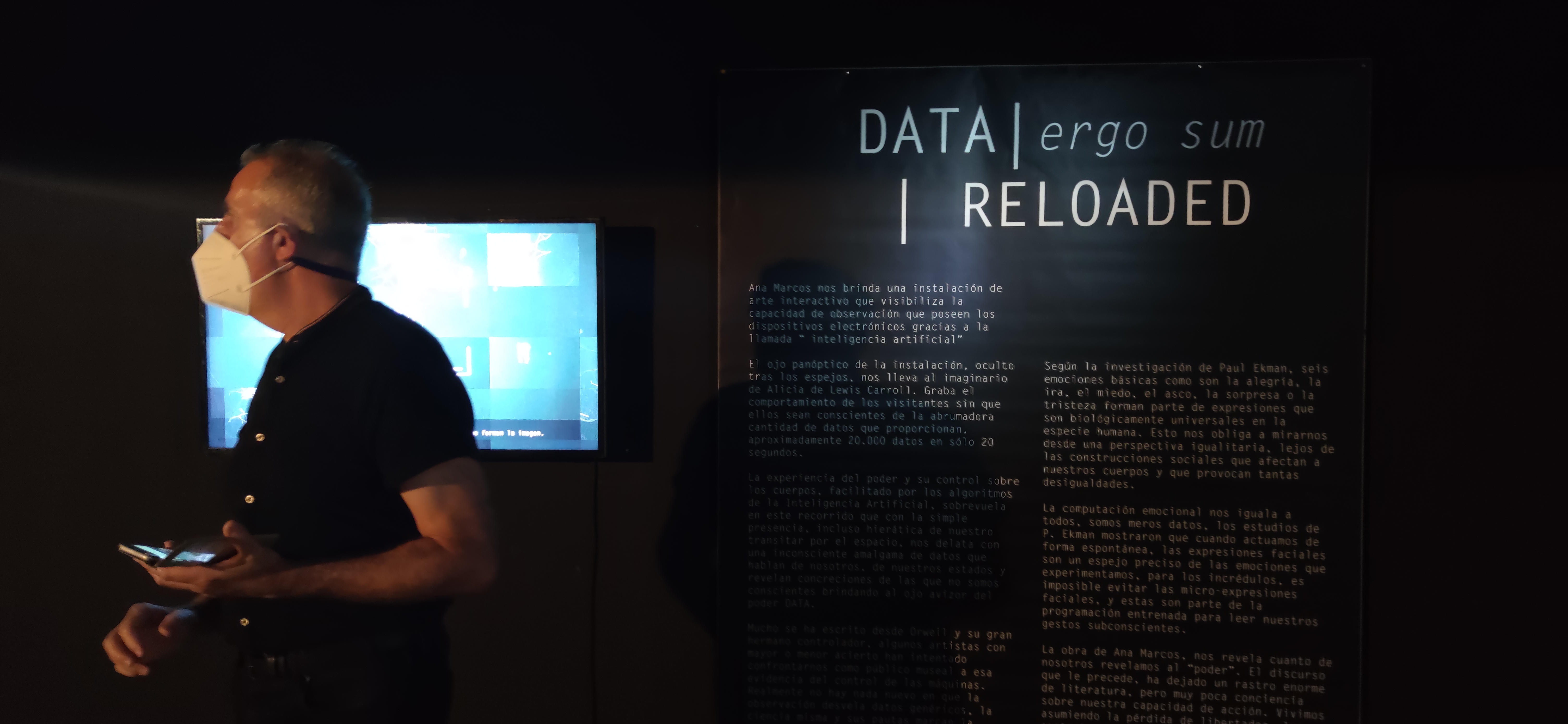

DATA | ergo sum | RELOADED is an interactive art installation that visualizes what machines equipped with artificial intelligence are able to see. Using mirrors to hold visitors’ attention, the installation observes and records participants’ behavior without their realizing that simply standing in front of a mirror yields an overwhelming amount of data: around 20,000 parameters are captured in just 20 seconds.

Developments in Computer Vision and Artificial Intelligence techniques have given machines the ability to obtain data from us without our active participation. This fact is presented to visitors through an artistic visualization of a portrait that combines their images with their personal big data.

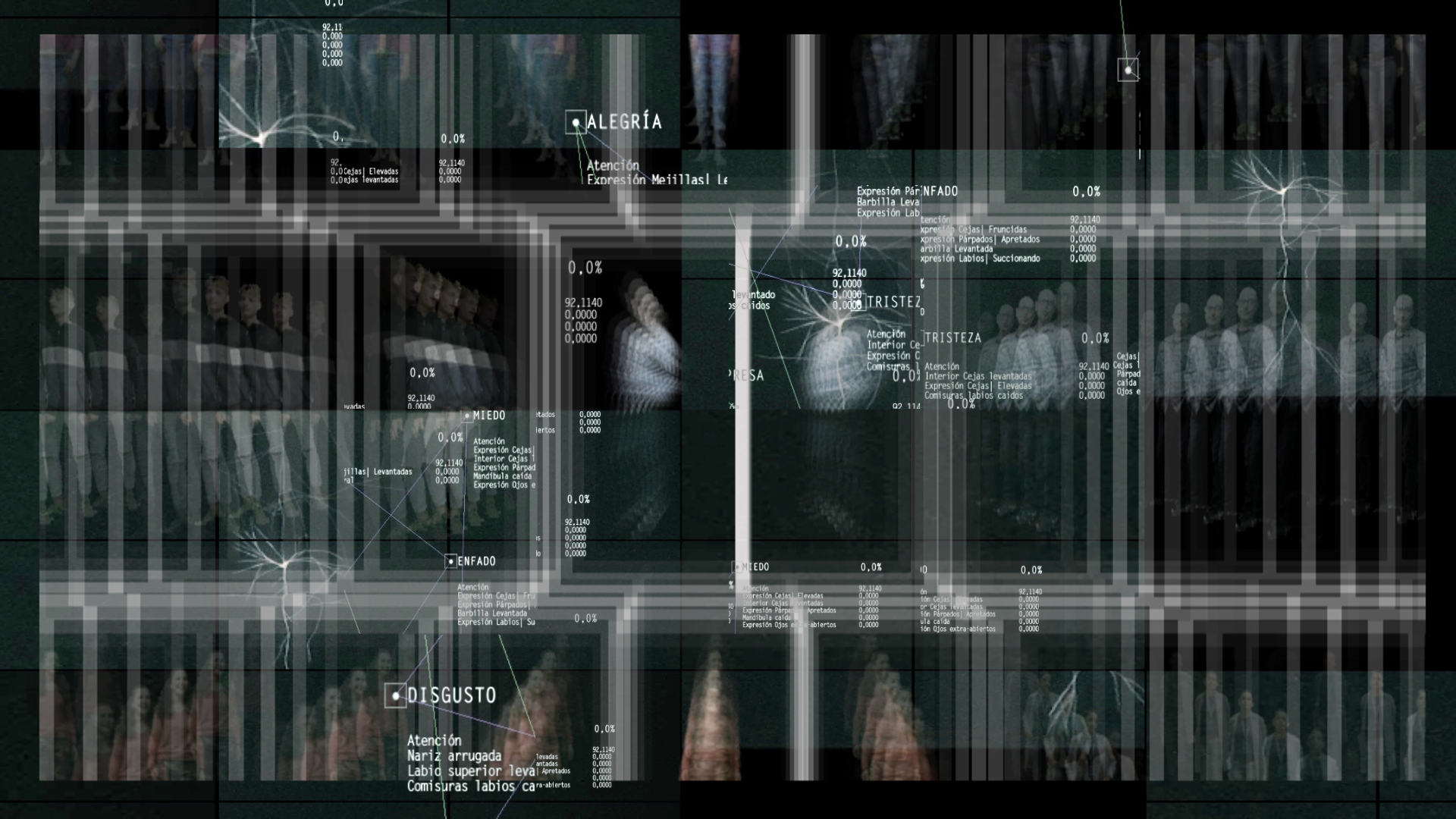

How do machines see and understand us? Essentially, this artistic project presents a different kind of digital portrait: instead of being a static image representing a moment or the romantic idea of conveying the essence of an individual, it consists of a complex visual system in which computational technology provides a different perspective. It is precisely these ways of seeing, and of seeing ourselves, that DATA | ergo sum invites us to review in front of its AI system. The challenge begins with the face, the part that most distinguishes humans, and ends with the body. This digital analysis, which makes us legible to a machine, opens new possibilities. We look at unfamiliar data, at the risk of recognizing both our vulnerability and the unrepresentable or speculative aspects of human life.

The installation is presented in two distinct spaces. In the first, called the Observation Room, two circular mirrors stand out. Cameras, sensors, and other technological elements are placed in the background so as not to distract attention from the mirrors and their reflections. Synthesized AI voice messages draw visitors’ attention, but no instructions are given: there is only a floor marking labeled “observation area.” The mirrors reflect visitors’ images while the cameras record each participant in real time for a few seconds.

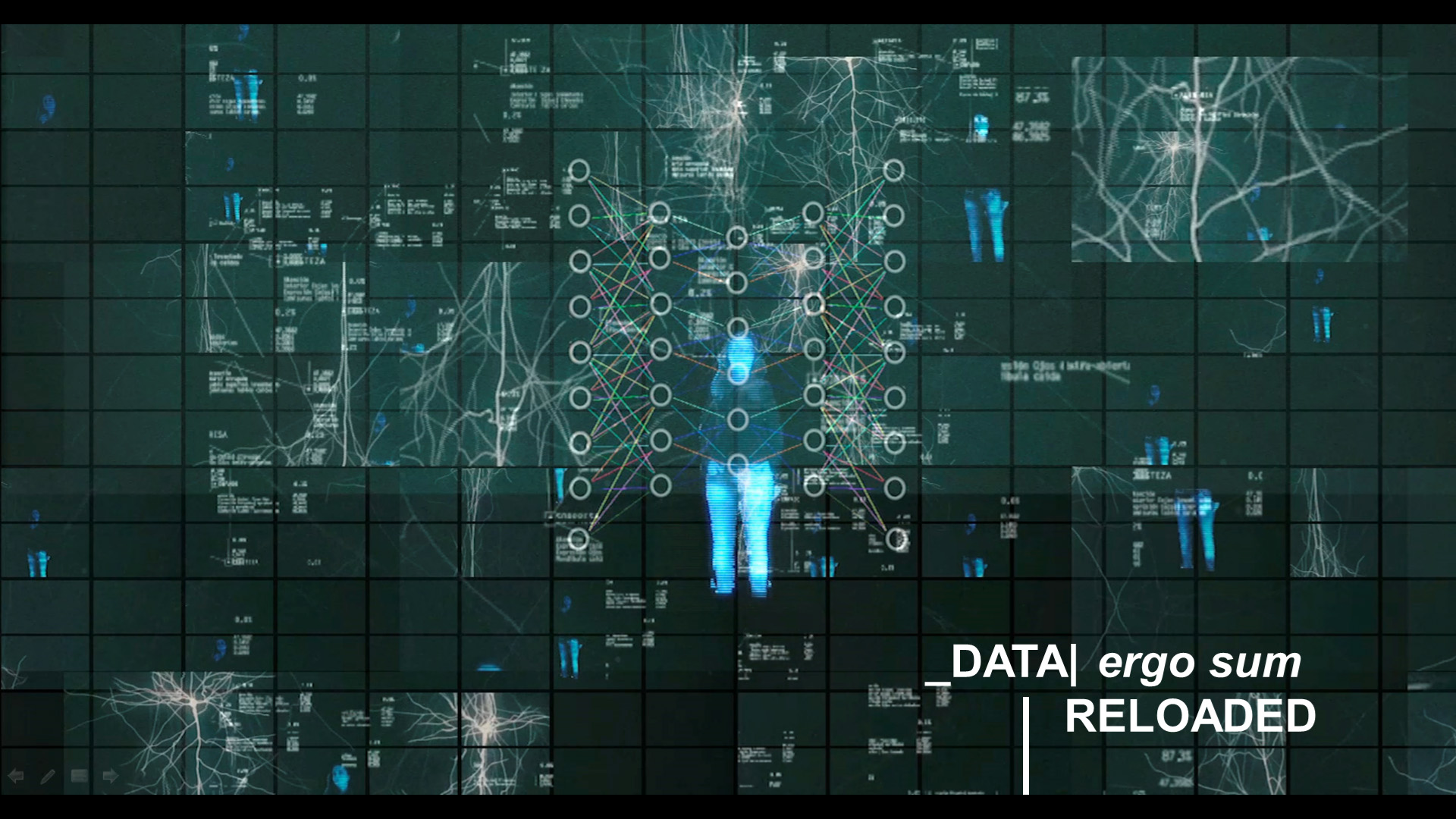

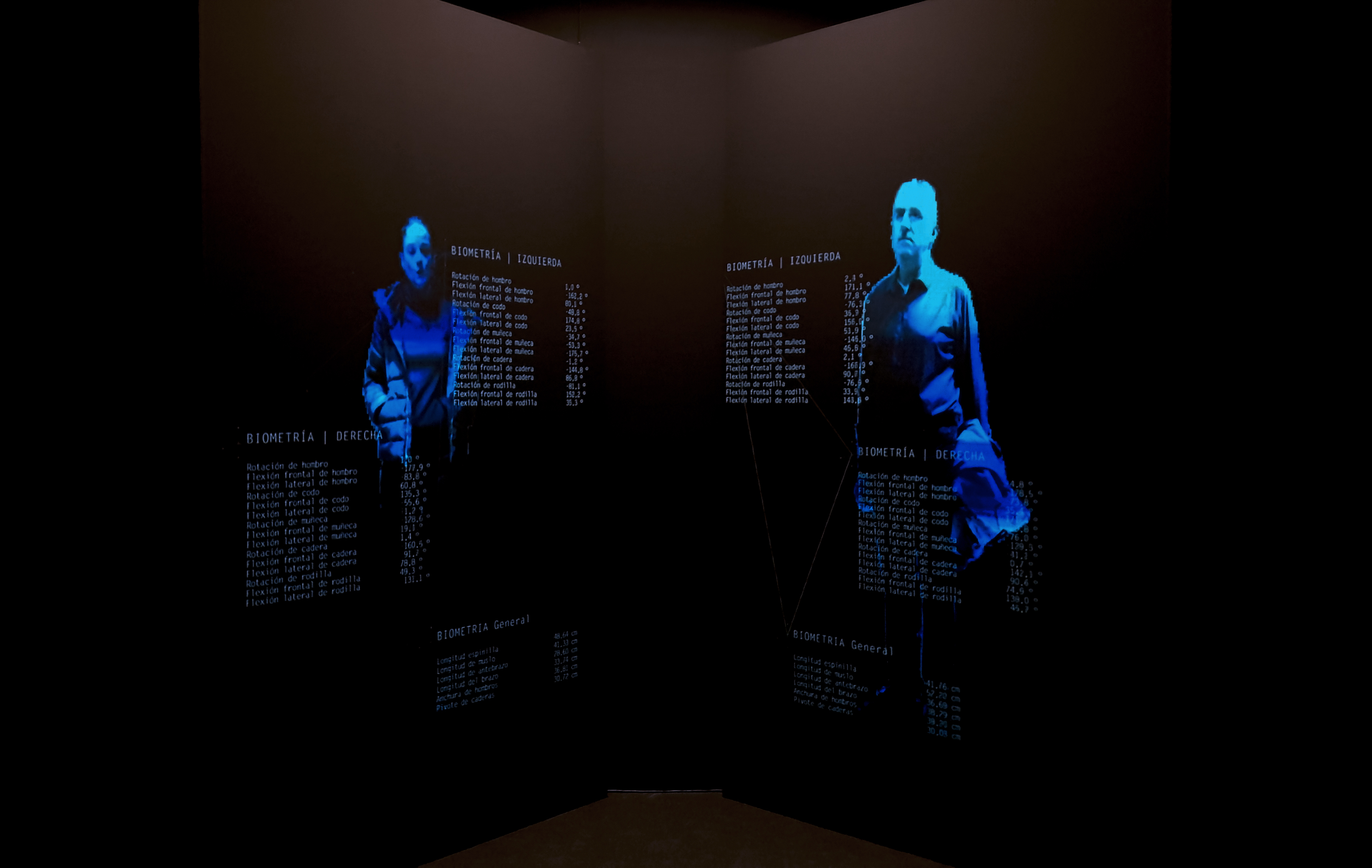

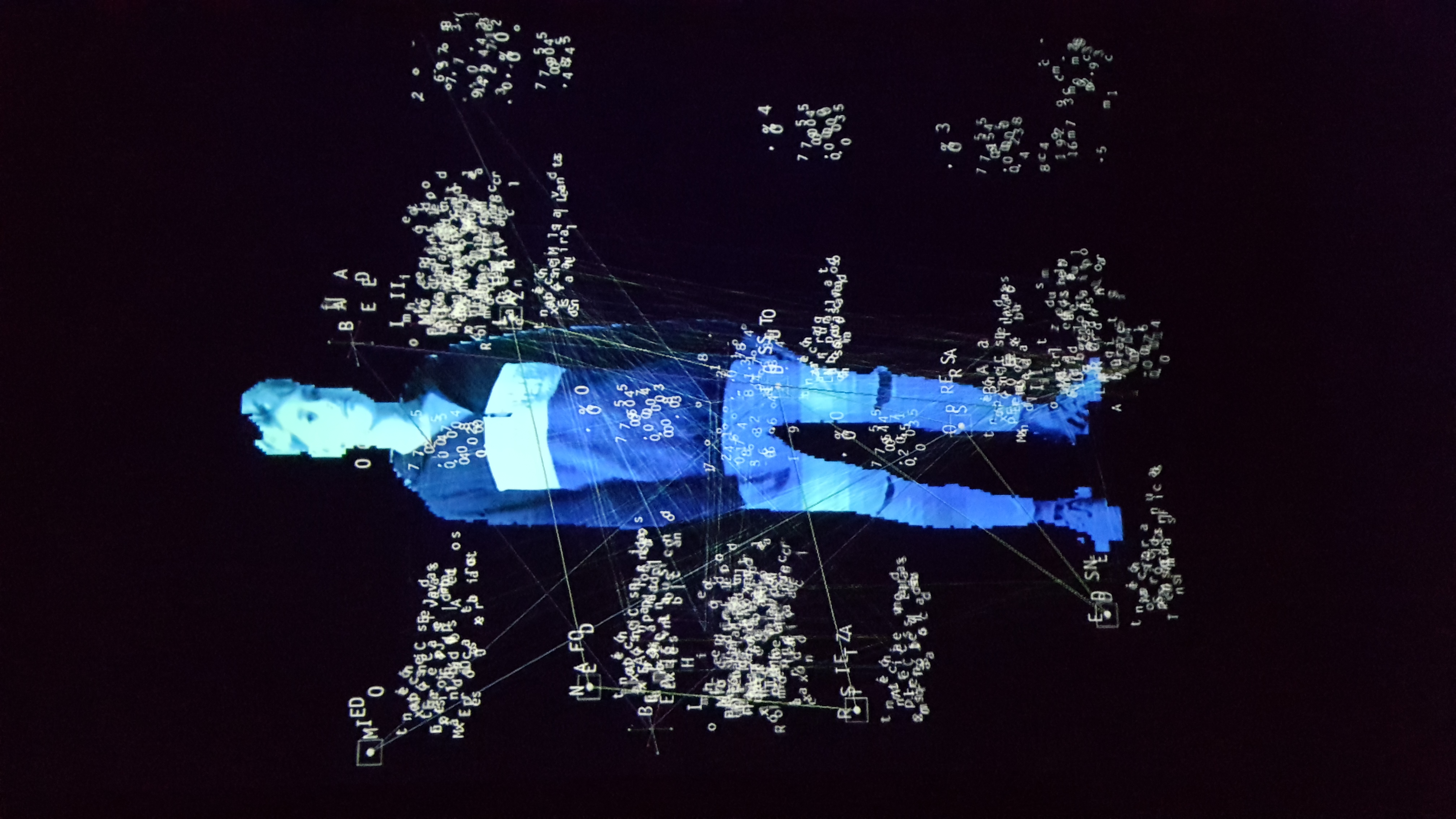

The second space is the Projection Room, where the final result is shown: a “holographic” video that shapes a digital portrait of the participants. The video is shown life-size, and the captured data appear floating around the participant, moving and evolving, with connections that form a network loosely resembling a neural network.

These “personal big data” are processed by AI algorithms that interpret the raw information by adding layers of value, such as biometric body parameters or basic emotions inferred from facial expressions (based on the scientific articles of psychologist Paul Ekman). The installation captures 21 facial expressions, 39 parameters related to body expression, and 10 biometric measurements, and deduces 7 emotions from the facial expressions. In summary, it is an “AI mirror” system that parameterizes facial expressions and physical traits and projects the information in sequence, reducing each individual to a stream of information.
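
To give a sense of scale, the figures quoted in the text can be related to one another with a little arithmetic. The sketch below is purely illustrative and not the installation’s actual software: it assumes, hypothetically, that the four groups of values (21 + 39 + 10 + 7) are recorded together as one sample, and asks how many such samples per second would add up to roughly 20,000 parameters in 20 seconds. The `Sample` class and all field and function names are invented for illustration.

```python
from dataclasses import dataclass
from typing import List

# Counts quoted in the text. Treating them as the fields of a single
# per-sample record is an assumption made only for this illustration.
FACIAL_EXPRESSIONS = 21
BODY_PARAMETERS = 39
BIOMETRIC_MEASUREMENTS = 10
EMOTIONS = 7


@dataclass
class Sample:
    """Hypothetical record of everything measured at one instant."""
    facial_expressions: List[float]
    body_parameters: List[float]
    biometrics: List[float]
    emotions: List[float]


def values_per_sample() -> int:
    """Number of values in one sample under the assumption above."""
    return (FACIAL_EXPRESSIONS + BODY_PARAMETERS
            + BIOMETRIC_MEASUREMENTS + EMOTIONS)


def implied_sample_rate(total_values: int, seconds: float) -> float:
    """Samples per second needed to reach total_values within seconds."""
    return total_values / values_per_sample() / seconds


if __name__ == "__main__":
    print(values_per_sample())                       # values in one sample
    print(round(implied_sample_rate(20_000, 20), 1)) # samples per second
```

Under this assumption, each sample holds 77 values, so the quoted total would correspond to roughly 13 samples per second over the 20-second recording. The real system may sample the different groups at different rates.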